Moving 27K unique images from Spatie medialibrary local to s3 storage using Laravel Queue

Published 17 September 2020 15:00 (12-minute read)

We never expected the project to reach this milestone, so we stored all the images on the server itself. After around 2 years, the monitoring service told us we had around 180GB of images stored in the project. So why are we now moving the images from local storage to s3?

Why did we move to s3?

The server we used has around 300GB of disk space for the project, and it handled serving all the images very well. But after a while, our backup script started reporting that the backups could not be completed because there was no space left on the device. That puzzled us at first, since 300GB sounds like plenty for 180GB of images. Apparently it's not enough for this project.

When we took a look at the DigitalOcean dashboard, we also saw that the server was hitting 90% of its data transfer limit almost every month.

P.S. This method also works for moving to other disks/filesystems, as long as the target recognizes the file structure (so Google Drive/G Suite may be possible with custom driver support).

How we moved to s3

We decided to move the images using the following tools:

- Laravel Eloquent Chunking

- Laravel Horizon for queue working

First, prepare the disks for the move:

// file: config/filesystems.php
<?php

return [
    'disks' => [
        //...

        'medialibrary' => [
            'driver' => 'local',
            'root' => storage_path('app/public'),
            'url' => env('APP_URL').'/storage',
            'visibility' => 'public',
        ],

        //...

        's3' => [
            'driver' => 's3',
            'key' => env('AWS_ACCESS_KEY_ID'),
            'secret' => env('AWS_SECRET_ACCESS_KEY'),
            'region' => env('AWS_DEFAULT_REGION'),
            'bucket' => env('AWS_BUCKET'),
            'url' => env('AWS_URL'),
            'endpoint' => env('AWS_ENDPOINT'),
            'visibility' => 'public',
        ],

        //...
    ],
];

And of course, set up your .env file with the correct AWS s3 credentials (or those of any other s3-compatible provider).
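Before starting a long-running move, it can help to confirm that the settings the s3 disk reads are actually filled in. The following is a minimal plain-PHP sketch of such a check. The function `missingS3Settings` is a hypothetical helper (not part of Laravel or the original project), and treating `AWS_URL` and `AWS_ENDPOINT` as optional is an assumption.

```php
<?php

// Hypothetical helper, not part of Laravel or the original project.
// Returns the names of required S3 settings that are missing or empty.
function missingS3Settings(array $env): array
{
    // These four keys are read by the 's3' disk in config/filesystems.php.
    // AWS_URL and AWS_ENDPOINT are treated as optional here (an assumption).
    $required = ['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY', 'AWS_DEFAULT_REGION', 'AWS_BUCKET'];

    return array_values(array_filter($required, function (string $key) use ($env): bool {
        return !isset($env[$key]) || trim((string) $env[$key]) === '';
    }));
}

// Placeholder values for illustration only.
$example = [
    'AWS_ACCESS_KEY_ID' => 'example-key',
    'AWS_SECRET_ACCESS_KEY' => 'example-secret',
    'AWS_DEFAULT_REGION' => '',
];

echo implode(', ', missingS3Settings($example)), PHP_EOL;
// prints "AWS_DEFAULT_REGION, AWS_BUCKET"
```

In a real application the array would come from the loaded environment rather than a hard-coded example.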

Dispatching media items

All media items within Spatie medialibrary are stored in a table, "media". We didn't want to overload the server by loading all the unique images in the system at once, so we decided to chunk the results into batches of 1,000 records.

We created a command to start the move. We wanted this command to be as flexible as possible, so the source and target disks are passed as arguments (version 1, for smaller databases):

// file: app/Console/Commands/MoveMediaToDiskCommand.php
<?php

namespace App\Console\Commands;

use App\Jobs\MoveMediaToDiskJob;
use Illuminate\Console\Command;
use Illuminate\Support\Facades\Storage;
use Spatie\MediaLibrary\Models\Media;

class MoveMediaToDiskCommand extends Command
{
    protected $signature = 'move-media-to-disk {fromDisk} {toDisk}';

    protected $description = 'Move media from disk to a new disk';

    public function __construct()
    {
        parent::__construct();
    }

    /**
     * Execute the console command.
     *
     * @return mixed
     * @throws \Exception
     */
    public function handle()
    {
        $diskNameFrom = $this->argument('fromDisk');
        $diskNameTo = $this->argument('toDisk');

        $this->checkIfDiskExists($diskNameFrom);
        $this->checkIfDiskExists($diskNameTo);

        Media::where('disk', $diskNameFrom)
            ->chunk(1000, function ($medias) use ($diskNameFrom, $diskNameTo) {
                /** @var Media $media */
                foreach ($medias as $media) {
                    dispatch(new MoveMediaToDiskJob($media, $diskNameFrom, $diskNameTo));
                }
            });
    }

    /**
     * Check if disks are set in the config/filesystem.
     *
     * @param $diskName
     * @throws \Exception
     */
    private function checkIfDiskExists($diskName)
    {
        if (!config("filesystems.disks.{$diskName}.driver")) {
            throw new \Exception("Disk driver for disk `{$diskName}` not set.");
        }
    }
}

For larger applications, it's recommended to use the power of the database indexes (thanks for the tip, Oliver Kurmis). This version dispatches a job that fetches the first 1000 records. After that's finished, it checks whether it needs to dispatch another job for the next 1000 records.

// file: app/Console/Commands/MoveMediaToDiskCommand.php
<?php

namespace App\Console\Commands;

use App\Jobs\CollectMediaToMoveJob;
use Illuminate\Console\Command;

class MoveMediaToDiskCommand extends Command
{
    // NOTE: this is a version for larger applications with millions of records (that uses the power of the database indexes).

    protected $signature = 'move-media-to-disk {fromDisk} {toDisk}';

    protected $description = 'Move media from disk to a new disk';

    public function __construct()
    {
        parent::__construct();
    }

    /**
     * Execute the console command.
     *
     * @return mixed
     * @throws \Exception
     */
    public function handle()
    {
        $diskNameFrom = $this->argument('fromDisk');
        $diskNameTo = $this->argument('toDisk');

        $this->checkIfDiskExists($diskNameFrom);
        $this->checkIfDiskExists($diskNameTo);

        dispatch(new CollectMediaToMoveJob($diskNameFrom, $diskNameTo));
    }

    /**
     * Check if disks are set in the config/filesystem.
     *
     * @param $diskName
     * @throws \Exception
     */
    private function checkIfDiskExists($diskName)
    {
        if (!config("filesystems.disks.{$diskName}.driver")) {
            throw new \Exception("Disk driver for disk `{$diskName}` not set.");
        }
    }
}

This command dispatches the first job, which handles the first 1000 media items that have to move to the other disk.

// file: app/Jobs/CollectMediaToMoveJob.php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Spatie\MediaLibrary\Models\Media;

class CollectMediaToMoveJob implements ShouldQueue
{
    // NOTE: this is a version for larger applications with millions of records (that uses the power of the database indexes).

    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    public $diskNameFrom;

    public $diskNameTo;

    public $offset;

    /**
     * Create a new job instance.
     *
     * @param $diskNameFrom
     * @param $diskNameTo
     * @param $offset
     */
    public function __construct($diskNameFrom, $diskNameTo, $offset = null)
    {
        $this->diskNameFrom = $diskNameFrom;
        $this->diskNameTo = $diskNameTo;
        $this->offset = $offset;
    }

    /**
     * Execute the job.
     *
     * @return void
     * @throws \Exception
     */
    public function handle()
    {
        // Order by id so "id > last id" always continues where the previous batch stopped.
        $query = Media::where('disk', $this->diskNameFrom)
            ->orderBy('id');

        if ($this->offset) {
            $query->where('id', '>', $this->offset);
        }

        $recordsToMove = $query->limit($limit = 1000)
            ->get();

        // Queue the actual move for every media item in this batch.
        foreach ($recordsToMove as $media) {
            dispatch(new MoveMediaToDiskJob($media, $this->diskNameFrom, $this->diskNameTo));
        }

        // A full batch means there may be more records, so queue the next batch.
        if ($recordsToMove->count() == $limit) {
            dispatch(new CollectMediaToMoveJob($this->diskNameFrom, $this->diskNameTo, $recordsToMove->last()->id));
        }
    }
}

When there are no items left to move, the job finishes without dispatching a new job for the next 1000 records.
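The batching idea can be tried out without a database. The following plain-PHP sketch simulates the "id greater than the last seen id, limited to N rows" pattern on an array of ids. The function names `nextBatch` and `collectAllBatches` are illustrative and do not exist in the project, and the array stands in for the media table.

```php
<?php

// Plain-PHP simulation of the "where id > last id, limit N" pattern.
// $sortedIds stands in for the media table; names here are illustrative only.
function nextBatch(array $sortedIds, ?int $afterId, int $limit): array
{
    $remaining = array_filter($sortedIds, function (int $id) use ($afterId): bool {
        return $afterId === null || $id > $afterId;
    });

    return array_slice(array_values($remaining), 0, $limit);
}

function collectAllBatches(array $ids, int $limit): array
{
    sort($ids); // a real query needs an "order by id" for the same guarantee
    $batches = [];
    $afterId = null;

    do {
        $batch = nextBatch($ids, $afterId, $limit);
        if ($batch !== []) {
            $batches[] = $batch;
            $afterId = end($batch);
        }
        // A full batch means there may be more records, so continue.
    } while (count($batch) === $limit);

    return $batches;
}

$batches = collectAllBatches([1, 2, 3, 5, 8, 13, 21], 3);
echo count($batches), PHP_EOL; // prints 3
```

Gaps in the ids (deleted records) do not matter here, because each batch starts from the last id that was actually seen instead of from a row count.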

Next, make a job that moves all generated files for a media item to the new disk. It creates one job for each file that the medialibrary stored for that media item. Because every file gets its own job, you can retry just the specific job that failed (and skip all the files that were already moved).

After all the file jobs have finished successfully, a final job updates the disk for that media item in the database.

// file: app/Jobs/MoveMediaToDiskJob.php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Illuminate\Support\Facades\Bus;
use Illuminate\Support\Facades\Storage;
use Spatie\MediaLibrary\Models\Media;
use Spatie\MediaLibrary\PathGenerator\PathGeneratorFactory;

class MoveMediaToDiskJob implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    public $diskNameFrom;

    public $diskNameTo;

    public $media;

    /**
     * Create a new job instance.
     *
     * @param Media $media
     * @param $diskNameFrom
     * @param $diskNameTo
     */
    public function __construct(Media $media, $diskNameFrom, $diskNameTo)
    {
        $this->media = $media;
        $this->diskNameFrom = $diskNameFrom;
        $this->diskNameTo = $diskNameTo;
    }

    /**
     * Execute the job.
     *
     * @return void
     * @throws \Exception
     */
    public function handle()
    {
        // check if media still on same disk
        if ($this->media->disk != $this->diskNameFrom) {
            throw new \Exception("Current media disk `{$this->media->disk}` is not the expected `{$this->diskNameFrom}` disk.");
        }

        // generate the path to the media
        $mediaPath = PathGeneratorFactory::create()
            ->getPath($this->media);

        $diskFrom = Storage::disk($this->diskNameFrom);
        $filesInDirectory = $diskFrom->allFiles($mediaPath);

        $jobs = collect();

        // create a job for each file (recursive) in the storage directory of the media item
        foreach ($filesInDirectory as $fileInDirectory) {
            $jobs->push(new MoveMediaFileToDiskJob($this->diskNameFrom, $this->diskNameTo, $fileInDirectory));
        }

        if ($jobs->count() == 0) {
            throw new \Exception('No jobs found to dispatch.');
        }

        // add a final job that updates the media disk in the database so it will load from the new storage disk
        $jobs->push(new MoveMediaUpdateDiskJob($this->media, $this->diskNameTo));

        // run the jobs one after another; the chain stops when a job fails
        Bus::chain($jobs)->dispatch();
    }
}
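The reason the database update sits at the end of the chain can be shown with a small plain-PHP sketch. It is not Laravel code: `runChain` is a hypothetical stand-in that runs steps in order and stops at the first one that throws, which is the behaviour the chain is relied on for here.

```php
<?php

// Plain-PHP illustration of chain semantics: steps run in order and the
// chain stops at the first step that throws. This is not Laravel code.
function runChain(array $steps): array
{
    $completed = [];

    foreach ($steps as $name => $step) {
        try {
            $step();
        } catch (Throwable $e) {
            // Later steps (including the database update) are never reached.
            return ['completed' => $completed, 'failed' => $name];
        }
        $completed[] = $name;
    }

    return ['completed' => $completed, 'failed' => null];
}

$disk = 'medialibrary';

$result = runChain([
    'copy original' => function () {},
    'copy thumb' => function () { throw new RuntimeException('timeout'); },
    'update disk' => function () use (&$disk) { $disk = 's3'; },
]);

echo $result['failed'], ' / ', $disk, PHP_EOL; // prints "copy thumb / medialibrary"
```

Because the failing copy stops the chain, the media item keeps pointing at the old disk, where all of its files still exist.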

In the next job, the actual move happens. It moves (or in our case copies, because we wanted to keep the data locally in case something unexpectedly failed) the file between the disks.

// file: app/Jobs/MoveMediaFileToDiskJob.php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Filesystem\FileNotFoundException;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Illuminate\Support\Facades\Storage;

class MoveMediaFileToDiskJob implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    public $diskNameFrom;

    public $diskNameTo;

    public $filename;

    /**
     * Create a new job instance.
     *
     * @param $diskNameFrom
     * @param $diskNameTo
     * @param $filename
     */
    public function __construct($diskNameFrom, $diskNameTo, $filename)
    {
        $this->diskNameFrom = $diskNameFrom;
        $this->diskNameTo = $diskNameTo;
        $this->filename = $filename;
    }

    /**
     * Execute the job.
     *
     * @throws FileNotFoundException
     * @return void
     */
    public function handle()
    {
        $diskFrom = Storage::disk($this->diskNameFrom);
        $diskTo = Storage::disk($this->diskNameTo);

        $diskTo->put(
            $this->filename,
            $diskFrom->readStream($this->filename)
        );
    }
}
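The job above hands a read stream to the target disk instead of a string with the file contents. The plain-PHP sketch below shows the same idea with built-in functions only. `copyViaStream` is a hypothetical helper, and two temporary files stand in for the local disk and the s3 bucket.

```php
<?php

// Copies a file through stream handles, so the contents are never held
// in memory as one string. Uses only built-in PHP functions.
function copyViaStream(string $sourcePath, string $targetPath): int
{
    $source = fopen($sourcePath, 'rb');
    if ($source === false) {
        throw new RuntimeException("Cannot open {$sourcePath} for reading.");
    }

    $target = fopen($targetPath, 'wb');
    if ($target === false) {
        fclose($source);
        throw new RuntimeException("Cannot open {$targetPath} for writing.");
    }

    $bytes = stream_copy_to_stream($source, $target);

    fclose($source);
    fclose($target);

    if ($bytes === false) {
        throw new RuntimeException('Copy failed.');
    }

    return $bytes;
}

// Temporary files stand in for the local disk and the s3 bucket.
$from = tempnam(sys_get_temp_dir(), 'from');
$to = tempnam(sys_get_temp_dir(), 'to');
file_put_contents($from, str_repeat('x', 1024));

echo copyViaStream($from, $to), PHP_EOL; // prints 1024
```

Note that the source file is left in place, which matches the copy-instead-of-move choice described above.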

Finally, the last job updates the disk for that media item in the database.

// file: app/Jobs/MoveMediaUpdateDiskJob.php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Spatie\MediaLibrary\Models\Media;

class MoveMediaUpdateDiskJob implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    public $media;

    public $diskNameTo;

    /**
     * Create a new job instance.
     *
     * @param Media $media
     * @param $diskNameTo
     */
    public function __construct(Media $media, $diskNameTo)
    {
        $this->media = $media;
        $this->diskNameTo = $diskNameTo;
    }

    /**
     * Execute the job.
     *
     * @return void
     */
    public function handle()
    {
        $this->media->disk = $this->diskNameTo;
        $this->media->save();
    }
}

Once everything is in place, you can run the following command. It starts the process and moves all the files.

php artisan move-media-to-disk public s3

Queue working

We didn't want to lose any data during the move in production, so we decided to use the power of the Laravel queue system. There are around 27,000 unique media items in the database/storage folder.

For each of those unique images we generate 6 formats (using Spatie medialibrary, see the model further down), which means we ran 189K jobs in total to move all the items from local storage to s3.

The 189K jobs came from: 27,000 * (6 + 1) = 189,000

- 27,000 unique media items

- 6 media conversions

- 1 original file
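The arithmetic can be checked with a few lines of PHP. The sketch counts only the per-file copy jobs, as the list above does, and combines that with the roughly 12 minutes mentioned at the end of this post to get an approximate throughput. The variable names are illustrative.

```php
<?php

$mediaItems = 27000;               // unique media items
$conversions = 6;                  // generated formats per item
$filesPerItem = $conversions + 1;  // plus the original file

$fileJobs = $mediaItems * $filesPerItem;
echo $fileJobs, PHP_EOL; // prints 189000

// Approximate throughput, assuming the whole run took about 12 minutes.
$jobsPerSecond = $fileJobs / (12 * 60);
echo $jobsPerSecond, PHP_EOL; // prints 262.5
```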

// file: app/Models/ImageGallery.php
<?php

namespace App;

use Illuminate\Database\Eloquent\Model;
use Spatie\MediaLibrary\HasMedia\HasMedia;
use Spatie\MediaLibrary\HasMedia\HasMediaTrait;
use Spatie\MediaLibrary\Models\Media;

class ImageGallery extends Model implements HasMedia
{
    use HasMediaTrait;

    // medialibrary
    public function registerMediaConversions(Media $media = null)
    {
        $this->addMediaConversion('thumb')
            ->width(300);

        $this->addMediaConversion('size-sm')
            ->width(600);

        $this->addMediaConversion('size-md')
            ->width(800);

        $this->addMediaConversion('size-lg')
            ->width(1000);

        $this->addMediaConversion('size-xl')
            ->width(1400);

        $this->addMediaConversion('size-xl2')
            ->width(2000);
    }

    public function registerMediaCollections()
    {
        $this->addMediaCollection('images');
    }
}

It's possible to convert the images to webp using the medialibrary from Spatie, but we didn't use this for the project. We use Cloudflare Pro for caching and protection in front of our Laravel application. It automatically serves the webp format when that reduces the file size (when the webp version would be bigger than the original file, it keeps the original).

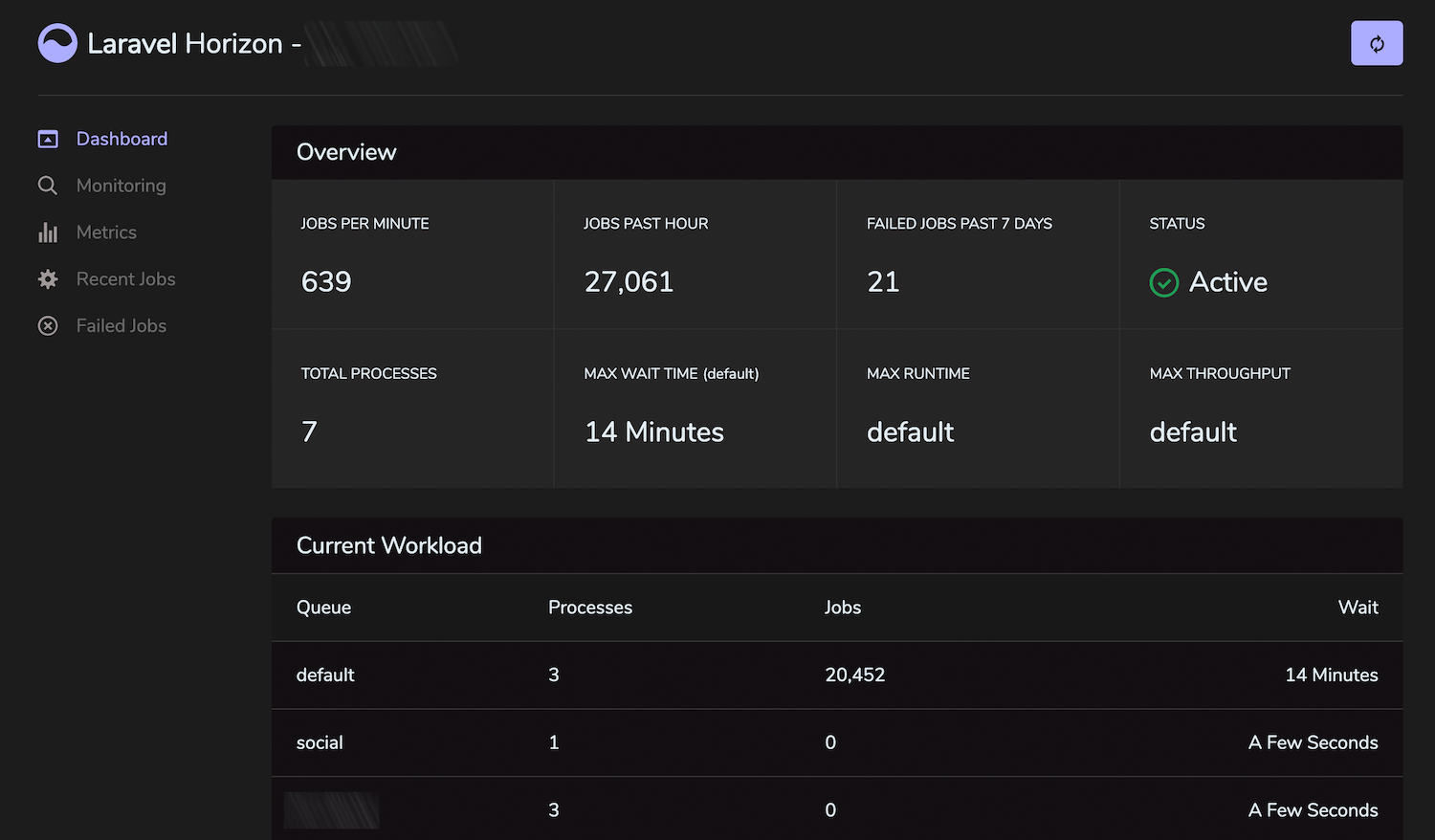

Horizon watching

After we executed the command, we monitored the results in Laravel Horizon. This is easy to do: even when jobs fail, we can simply retry the failed jobs from the Horizon dashboard (the screenshot is from a smaller dataset than production, around 3,800 unique media items).

Useful sources

Do you want to learn more about some techniques used in this blogpost? Take a look at the following resources.

- Eloquent Course by Jonathan Reinink

- Laravel Queues in Action by Mohamed Said

- Job chaining in Laravel 8

- Laravel Horizon

- Spatie medialibrary

Other solutions we looked at

Laravel is not the only solution we looked into. We also considered the following tools for the move, but unfortunately they didn't fit our needs.

- "aws s3 cli": during testing we got some random timeouts, so we quickly started looking for something else. We wanted to keep track of the migration process without manually validating which files did or didn't move successfully.

- "rclone": the same reason as the aws s3 cli. We wanted to keep track of the migration process.

Challenges

There are a few things to keep in mind when working on this:

- Keep track of the successful and failed file movements. We solved this by using the Laravel queue system with Horizon as a dashboard, and by splitting the work into one job per file so we know exactly which move job failed.

- Don't recreate the images. At first we thought about moving only the original file to the s3 bucket and leaving the other 6 media conversions behind. But then we realized the conversions would have to be regenerated, which means extra workload on the server and the same number of s3 requests. Moving the generated conversions directly was faster and less likely to fail.

- Use Laravel 8 job chaining. This new feature runs the jobs of a chain in order and only continues when the previous job was processed successfully. Because the disk in the database is only updated at the end of the chain, the service stays up and keeps serving the images for our site during the move.

- Cache the s3 resources with Cloudflare. Our application has a lot of visitors that request image data, so why not cache it with Cloudflare? It's edge optimized and free for caching. The costs of aws s3 are based on the amount of data stored in s3 and the data transferred when a user requests it. I've created a stateless route (one that doesn't send a session with the response) so that Cloudflare can cache the image (read more about how I do this here).

So why this blog post? I posted on Twitter about the results of using Laravel Horizon to move around 27K images from Spatie medialibrary local storage to s3. It moved all those images in around 12 minutes. The post got so much attention that I decided to write this blog post to share what I learned.